By Ella Feathers, with the input and technical expertise of Angel Hsu and Zhi Yi Yeo

Machine learning (ML) and artificial intelligence (AI) applications are dominating headlines and public discourse for the threats and opportunities they pose to society. These technologies can be misused, with applications like generating deepfakes and fabricating information. They have also garnered criticism for perpetuating racist and sexist biases. However, there are a myriad of positive use cases as well, like providing feedback on written drafts, debugging code, and automating tedious processes. With so many ‘what ifs’ and possible paths forward, many worry about how to effectively and ethically use these new tools that have been trained on vast expanses of text and data from the Internet.

AI is a rapidly evolving technology that is still in relative infancy. Researchers, politicians, and the public voice concerns about its potential for racial profiling and eliminating jobs. However, there are many possibilities for socially beneficial uses of this technology when it is understood and implemented with care.

DDL is actively researching what advances in AI and ML will mean for tracking climate change commitments. As the number of companies, financial institutions, and subnational governments setting climate commitments continues to grow, AI can be used to facilitate data collection and make climate data sets interoperable. AI applications are particularly promising in the field of natural language processing (NLP) and large language models (LLMs), which power platforms like ChatGPT. Because climate change information is frequently formatted as unstructured text, these approaches can help us understand and compare how different entities are planning to tackle climate change. In this blog, we provide an overview of how we’re utilizing AI and ML in our work evaluating net-zero pledges.

Making Sense of Alphabet Soup: AI, ML, NLP & LLMs

To understand how these technologies can improve tracking of net-zero commitments, it helps to first understand what the terms AI, ML, NLP, and LLM actually mean.

In a nutshell, AI describes technology that attempts to replicate human decision-making processes. This includes everything from facial recognition to search engine optimization to chatbots. ML is a subcategory of AI focused on building algorithms that can generate trends and insights from data by learning, rather than having a researcher select which statistical models and tests to conduct.

NLP is an AI/ML technique in which computers dissect large bodies of text, or natural language. NLP models help algorithms recognize patterns within written or spoken language, including how words relate to each other within a sentence, paragraph, or body of text. These methods are powerful when a researcher has a large volume of unstructured textual data, such as political speeches, social media posts, or policy documents, where it would be too onerous to manually inspect each document and search for patterns. NLP algorithms attempt to replicate the human process of mining a text for valuable information, and they can also discover “unseen” patterns in text that a researcher may not have known about a priori. NLP algorithms also power much of the underlying technology of search engines. For example, when you conduct a search to find bean casserole recipes, NLP models analyze your query and the content of web pages to find the results most similar to what you are looking for.
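To make the search example concrete, here is a minimal sketch of one classic way to score how similar a query is to a page: count the words in each and compare the counts with cosine similarity. This is an illustration only, not how any particular search engine works. The page texts and the function names (`tokenize`, `cosine_similarity`, `rank_pages`) are made up for this example, and real systems add steps such as stemming, so that "beans" matches "bean", which this sketch leaves out.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two word-count vectors (Counters)."""
    dot = sum(a[word] * b[word] for word in a if word in b)
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def rank_pages(query, pages):
    """Return (score, title) pairs sorted from most to least similar."""
    query_counts = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_counts, Counter(tokenize(text))), title)
        for title, text in pages.items()
    ]
    return sorted(scored, reverse=True)


# Hypothetical page contents, invented for illustration.
pages = {
    "casserole": "Easy green bean casserole recipe with crispy onions",
    "chili": "Slow cooker chili recipe with beans and beef",
    "news": "City council announces a new net-zero target for 2040",
}

ranking = rank_pages("bean casserole recipes", pages)
print(ranking[0][1])  # the casserole page shares the most words with the query
```

Only exact word matches count here, which is why the chili page scores zero even though it mentions "beans" and "recipe". Closing that gap is a large part of what modern NLP models are for.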

LLMs, the NLP technology powering popular chatbots like ChatGPT, are a subcategory of machine learning models that have been trained on massive quantities of text in order to develop an understanding of how language works. These models are typically designed to serve various functions, including recognizing new text, summarizing blocks of content, translating text across different languages, and producing written responses when prompted with questions or statements.

Efficiency Boost: Automating Data Collection for the Net Zero Tracker

DDL partners with the Energy & Climate Intelligence Unit (ECIU), NewClimate Institute, and Oxford Net Zero to build the Net Zero Tracker (NZT), which aims to create a comprehensive register of net-zero and ambitious climate pledges made by nations, states and regions, cities, and major companies.

The NZT collects data on 4,000+ entities from a wide variety of sources, including disclosure reports to the CDP, climate policy documents, and corporate social responsibility reports. To date, more than 250 student volunteers have aided in the data collection and extraction process, which involves sifting through these various documents to extract targets and then scoring their transparency and completeness according to a codebook developed by the NZT consortium.

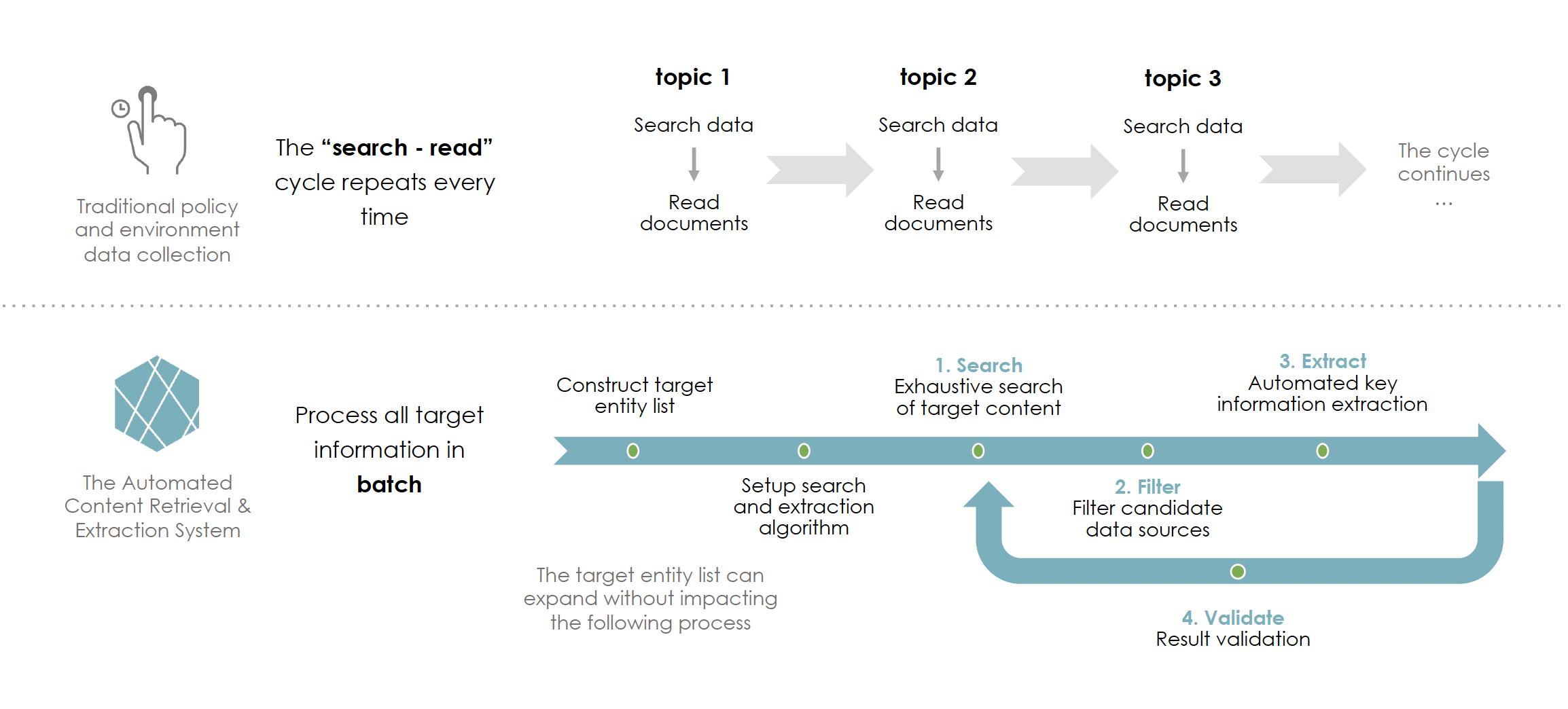

As reports show that the need for climate action is more urgent than ever, companies, cities, and regions are rushing to establish net-zero commitments, making the process of maintaining and updating the NZT challenging. Recognizing the potential to automate the data collection and extraction process currently carried out by hand, DDL enlisted Arboretica to pilot an AI model able to scour the Internet for data on net-zero targets. Supported by the Climateworks Foundation, we branded this project ‘Net-Zero HERO: High-Performance Extraction and Retrieval Operation’ and set out to explore how machine learning techniques can increase the efficiency and scalability of data collection for the Tracker. With the time-consuming process of searching and analyzing net-zero documents streamlined, the NZT can be a powerful tool in the public’s and policymakers’ arsenal for tracking and scrutinizing net-zero developments in real time.

HERO systematically collects data from documents published on the Internet that mention a net-zero target. Using ML techniques and building on existing NLP models, HERO also conducts key checks to ensure that the data collected is reliable, accurate, and relevant. Human volunteers then only need to validate the data’s accuracy.
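As a rough illustration of the collect, check, and validate flow described above, the sketch below scans a document for a sentence that mentions net zero, pulls out a candidate target year, and flags ambiguous cases for the human validator. It is a toy built on regular expressions, not HERO's actual method, which builds on ML and existing NLP models. Every name here (`Candidate`, `extract_candidate`, the patterns, the `ambiguous` flag) and the sample text are assumptions made for this example.

```python
import re
from dataclasses import dataclass
from typing import Optional

# Hypothetical patterns: "net zero" / "net-zero" / "netzero", and years 2020-2099.
NET_ZERO_PATTERN = re.compile(r"\bnet[\s-]?zero\b", re.IGNORECASE)
YEAR_PATTERN = re.compile(r"\b(20[2-9]\d)\b")


@dataclass
class Candidate:
    entity: str
    target_year: Optional[int]
    evidence: str    # the sentence the candidate was taken from
    ambiguous: bool  # True when no single year could be identified


def extract_candidate(entity, text):
    """Return a Candidate from the first sentence mentioning net zero, else None."""
    # Naive sentence split on ., ! or ? followed by whitespace.
    for sentence in re.split(r"(?<=[.!?])\s+", text):
        if not NET_ZERO_PATTERN.search(sentence):
            continue
        years = [int(year) for year in YEAR_PATTERN.findall(sentence)]
        # Accept the year only if exactly one appears in the sentence.
        target_year = years[0] if len(years) == 1 else None
        return Candidate(entity, target_year, sentence.strip(), target_year is None)
    return None


# Invented sample text; "Acme Corp" is not a real NZT entry.
report = (
    "Acme Corp published its sustainability report. "
    "We commit to reaching net-zero emissions by 2040. "
    "Our offices are in Paris."
)
candidate = extract_candidate("Acme Corp", report)
print(candidate.target_year, candidate.ambiguous)  # 2040 False
```

Every candidate would still go to a human volunteer for validation. The `ambiguous` flag only marks the records that are most likely to need a closer look.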

This flowchart demonstrates how NZT HERO leverages AI to more quickly and efficiently source, filter, extract, and analyze global net-zero commitments.

The pilot algorithm for HERO has dramatically increased the speed of data collection, by an estimated 200-300%, sharply reducing the manual labor hours required. HERO has achieved greater than 50% accuracy for 5 of the 6 types of data it collects, and more than 90% accuracy for two of those categories.
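For readers curious how a per-category accuracy figure like this can be computed, the sketch below compares automatically extracted records against human-validated ones, field by field. The field names and records are invented toy data, not HERO's categories or results, and `per_field_accuracy` is a hypothetical helper written for this example.

```python
from collections import defaultdict


def per_field_accuracy(predicted, validated):
    """Share of records, per field, where the extracted value matches the validated one."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for pred, gold in zip(predicted, validated):
        for field, gold_value in gold.items():
            total[field] += 1
            if pred.get(field) == gold_value:
                correct[field] += 1
    return {field: correct[field] / total[field] for field in total}


# Toy records for illustration only.
predicted = [
    {"target_year": 2050, "status": "pledged"},
    {"target_year": 2040, "status": "in law"},
    {"target_year": 2030, "status": "pledged"},
    {"target_year": None, "status": "pledged"},
]
validated = [
    {"target_year": 2050, "status": "pledged"},
    {"target_year": 2045, "status": "in law"},
    {"target_year": 2030, "status": "in policy"},
    {"target_year": 2035, "status": "pledged"},
]

accuracy = per_field_accuracy(predicted, validated)
print(accuracy)  # {'target_year': 0.5, 'status': 0.75}
```

Reporting accuracy separately for each type of data, rather than as one overall number, shows which fields the algorithm handles well and which still lean heavily on human validation.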

With ever-increasing new net-zero commitments being pledged, the climate tracking and accountability space requires algorithmic solutions to keep up with the rapidly growing information ecosystem. Improvements in HERO reduce the need for manual data collection and make it easier and faster to collect more data about entities’ net-zero commitments.

HERO’s Future – ChatNetZero

The rapidly growing field of AI offers many opportunities for scientific advances that will drive climate progress forward.

In the past year, we’ve improved the Net Zero Tracker HERO algorithm by altering it to collect more variables to detect finer trends and anomalies in net-zero commitments. These improvements have allowed us to conduct more detailed analyses, such as determining whether a company in one industry sector is more likely than another to commit to an ambitious net-zero goal.

Our next steps for the NZT HERO project include expanding our data search and collection algorithms to include other languages and launching ChatNetZero, the world’s first Net Zero AI chatbot. Trained with data from the Net Zero Tracker, ChatNetZero is a large language model (LLM)-based chatbot that can competently analyze unstructured net-zero related text documents and serve as a question-answering platform to decipher the credibility of business, government, or financial institutions’ decarbonization plans. Given the volume of “greenwashing” associated with many entities’ net-zero pledges, ChatNetZero will be a powerful tool to combat growing misinformation.

These AI-driven improvements to the Tracker demonstrate essential progress toward the ideal, fully-realized use case of AI in this context: continuous collection of net-zero commitments that allows for accountability and emissions tracking in real time. Interactive access to real-time data would promote transparency, hold actors accountable to their targets and prompt actors to set more ambitious climate goals than their peers, initiating a “race to the top” of decarbonisation.

It’s essential to understand the positive, scalable impacts of tools like AI, ML, and NLP alongside the ‘buzz’ generated by chatbots. Effective utilization of AI technologies is crucial to accurately showing what climate progress we have made so far, and where we need to go from here.

We are currently seeking partners and funders for ChatNetZero.ai. If interested, please get in touch.
